On 17 December 2021, after eight years of informal and formal discussions about the challenges raised by autonomous weapons systems (AWS), states parties to the Convention on Certain Conventional Weapons (CCW) agreed on a mandate that left many dissatisfied. The Group of Governmental Experts (GGE) on lethal autonomous weapons systems (LAWS) was to meet for 10 days in 2022 to “consider proposals and elaborate, by consensus, possible measures, including taking into account the example of existing protocols within the Convention, and other options related to the normative and operational framework on emerging technologies in the area of LAWS”.

For many stakeholders, both states parties and civil society representatives, this was a disappointing result, preceded by the GGE’s failure to agree on a set of shared recommendations at its final meeting in early December 2021. Momentum for a negotiation mandate under the auspices of the CCW had been growing for some time. But it was brought to a halt by the uncompromising positions of some states parties, a group that includes some of the chief developers and users of weaponised artificial intelligence (AI). States parties such as the United States, Israel, India, and Russia continue to dispute the necessity of any novel legal prohibition and regulation of AWS.

This outcome puts the future and relevance of discussions on AWS at the CCW in jeopardy. Civil society voices, such as Human Rights Watch, are beginning to call more forcefully for moving efforts towards a legal treaty on AWS outside the CCW and its consensus-bound framework. The successful negotiation of humanitarian disarmament treaties such as the 1997 Mine Ban Treaty, the 2008 Convention on Cluster Munitions, and the 2017 Treaty on the Prohibition of Nuclear Weapons demonstrates the potential advantages of such processes. Yet such a move remains a sobering alternative to the current forum, given that many of the key developers of AWS will likely not participate in it.

Having closely observed the work of the GGE since its first session in November 2017, I can sympathise with the sense of frustration felt by many states parties and civil society actors. This feeling is intensified by the fact that debates among states parties have come a long way since the topic of AWS first entered the international agenda, in terms of substance, the sophistication of the challenges expressed, and momentum towards regulation and prohibition. Over the course of four years, many states parties at the GGE, such as Austria, Chile, Brazil, Mexico, and Belgium, have found their voices, expressing ever clearer and more significant positions on the precise challenges raised by AWS.

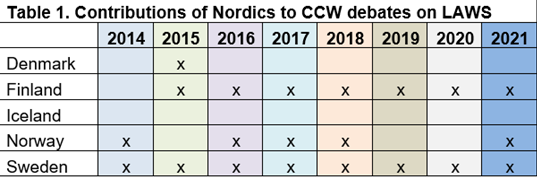

Yet some states parties remain conspicuously silent on the potential governance of AWS, and this group includes Denmark. In the eight years of discussions at the CCW, Denmark has delivered only a single statement, in 2015. Characteristic of statements delivered by states parties at the time, Denmark’s statement is general in character and highlights the future orientation of the CCW’s discussions: “no lethal autonomous weapons system has been developed yet”. Referring to the notion of meaningful human control as “at the very core of our discussions”, Denmark also states that “all use of force must remain under ‘meaningful human control’”. This position echoes two prominent tenets of the debate at the CCW. First, it casts AWS as a futuristic concern rather than connecting it to the ongoing integration of autonomous features into weapon systems already in use. Second, it highlights the role that humans should continue to play in decision-making on the use of force even as more cognitive skills are ‘delegated’ to AI systems, a role expressed through notions such as “meaningful human control”, the human element, or human supervision.

But Denmark has been completely silent on the topic since the creation of the GGE. This record deviates from that of the other Nordic countries, which, with the exception of Iceland, have been regular contributors to the CCW debates.

Given the growing media profile and significance of the topic, Denmark’s persistent silence is a surprising observation that I want to unpack in the remainder of this essay.

I will do so in two steps: first, I provide a short overview of the extent to which the Danish military already uses weapon systems with automated and autonomous features. While debates about AWS at the GGE have typically remained focused on the future, characterising themselves as “pre-emptive norm-making”, there is a longer historical trajectory of weapons systems with automated and autonomous features in targeting starting in the 1950s. Examples of such systems include guided missiles, air defence systems, active protection systems, counter-drone systems, and loitering munitions. The extent to which these systems can operate in automated and autonomous ways is often not clearly accessible from open-source data. But looking at such systems can still tell us something about the development trajectory of systems with autonomy in targeting – and the quality of human control they are operated under. Here, I will also briefly consider Denmark’s history of drone development and usage. While drones often remain under the remote control of a human operator, their trajectories of technological development and affordances connect closely to AWS.

Second, I consider Denmark’s meeting points with weaponised AI at a doctrinal level by looking into its participation in strategic partnerships with an AI component, for example within NATO. I close with a critical conclusion, calling on Denmark to finally find its voice in the debate about AWS if it does not want to become a recipient of rules and norms shaped by others in both explicit and implicit ways.

Denmark and weapons with automated and autonomous functions

While Denmark is neither a developer nor a ‘big player’ when it comes to AWS, it “already possesses weapon systems with some functions that enable the weapon to behave beyond direct human control”. According to a 2017 survey of Danish personnel serving in the Danish Acquisition and Logistics Organisation, Defence Command at the Danish Ministry of Defence, both the Royal Danish Navy and the Royal Danish Air Force possess at least five types of guided missiles as well as at least four countermeasure systems with autonomous features.

Danish military personnel characterised missile systems such as the Harpoon Block II anti-ship missile, the Evolved Sea Sparrow air defence missile, and the AGM-65 Maverick air-to-ground missile as “semi-autonomous”, following the definition provided by US DoD Directive 3000.09: “a weapon system that, once activated, is intended to only engage individual targets or specific target groups that have been selected by a human operator”. Distinguishing between autonomous and semi-autonomous systems in this way is contested. Target selection, a process that the Directive does not clearly delineate, becomes the only marker of the distinction, making it “a distinction without difference”: we are not told what target selection actually implies.

In any case, the use of weapon systems with autonomous features in the Danish military means that its personnel already experience the challenges of complex human-machine interaction that are typical of ‘delegating’ cognitive tasks to machines. Complex human-machine interaction sets operational limits to the possibility of human operators retaining meaningful control over the use of force. Typical challenges inherent to human-machine interaction include the operation of systems with autonomous features at machine speed, the complex nature of the tasks that human operators are asked to perform in collaboration with machine systems, and the demands this places on human operators. As my previous research on air defence systems demonstrates, these challenges can make the exercise of meaningful human control in specific use-of-force situations incrementally meaningless rather than meaningful. But, in the Danish military context, such challenges have “perhaps […] not been subjected to significant reflection”.

When it comes to drones, Denmark’s operational military capacities also remain more limited than those of its allies. Most importantly, Denmark does not possess armed drones and does not appear interested in acquiring them. Owing to its close alliance with the United States, however, the Danish Security and Intelligence Service (Politiets Efterretningstjeneste, PET) has been reported to have collected data used for targeted killings by US drone strikes in Yemen. Chiefly, this involved recruiting a Danish citizen as a double agent, thereby contributing information to the heavily contested CIA drone strike that killed US citizen Anwar al-Awlaki in Yemen in September 2011. The Danish government has consistently denied any such role, but other, similar potential collaborations with the US drone programme have also shed light on the roles played by other US allies, such as Germany and the UK.

Since the 1990s, Denmark has used different models of mostly mini and often hand-launched drones for intelligence and reconnaissance purposes. These include the Tårnfalken (an adapted version of the French Sperwer, in use until 2005), the US-manufactured Raven B (2007–2011), and the PUMA AE. With the 2018 Defence Agreement, Denmark formulated an interest in building a national capacity for developing drones. Such initiatives have led to the manufacture of the Nordic Wing Astero, a so-called “super-drone”: a mini, fixed-wing, hand-launched drone for surveillance and reconnaissance missions. The Astero seems similar in size and functionality to the US-manufactured Raven, which has been operational since the early 2000s. Like the Raven, the Astero can be remotely controlled but can also fly autonomously (with technological upgrades always possible). The main difference between the two seems to be that the Astero can stay in the air considerably longer. The Astero is unlikely to be armed and is therefore unlikely to alter Denmark’s current approach to armed drones.

The 2018 Defence Agreement also included an ambition to explore “the potential of drones in monitoring its Arctic regions”, followed by a financial commitment of 1.5 billion Danish kroner (ca. €200m) for this purpose. In September 2021, the Danish MoD further announced “plans to establish a competence center for smaller drones at Hans Christian Andersen airport” in Odense, which already hosts Denmark’s International Test Center for Drones.

Denmark’s participation in strategic partnerships on AI

Denmark’s development and use of weaponised applications of AI at home therefore remains limited. But by participating in strategic partnerships on AI led by its allies, Denmark is already in contact with military applications of AI, and will come into ever closer contact with them. I want to highlight two partnerships here: the Artificial Intelligence Strategy for NATO and the AI Partnership for Defense.

NATO Defence Ministers adopted the Artificial Intelligence Strategy in October 2021 to enhance the alliance’s cooperation and interoperability “to mitigate security risks, as well as to capitalise on the technology’s potential to transform enterprise function, mission support, and operations”. This phrasing clearly demonstrates the two perspectives that NATO takes on military AI: as a potential security risk, if deployed by “competitors and potential adversaries”, and as a force multiplier and “enabler”, if deployed by the alliance itself. The strategy is structured around six key principles that commit NATO members to only “develop and consider for deployment” military applications of AI that are lawful, responsible and accountable, explainable and traceable, reliable, and governable, and that incorporate bias mitigation. Notions of human control feature in the principles of responsibility and accountability, as well as governability.

Denmark is also part of the US DoD-led AI Partnership for Defense (PfD), established in 2020. The PfD brings together 16 countries that aim to collaborate on military applications of AI: Australia, Canada, Denmark, Estonia, Finland, France, Germany, Israel, Japan, Norway, the Netherlands, the Republic of Korea, Singapore, Sweden, the UK, and the US. The PfD’s goal is “to create new frameworks and tools for data sharing, cooperative development, and strengthened interoperability”. Rather than focusing on jointly developing military applications of AI, the PfD seeks to make its partners “AI ready”, a term that can be taken to mean very different things.

What does Denmark’s participation in these initiatives mean for its approach to military AI? The US, a lead actor in both initiatives, has by far the most advanced operational capabilities in military applications of AI of all the collaborators and allies. The publicised focus of these initiatives means that collaborators will come into close contact with functional applications of AI that the US uses and that are developed by US-based companies. Why is this significant? We know from our previous research on air defence systems that practices at the design stage of military AI are crucial for shaping what an AI system is capable of and how it ‘makes decisions’. From a Science and Technology Studies (STS) perspective, technologies come with particular affordances: they enable and constrain how they can be used and, in turn, the types of behaviour they make possible. The design of technologies such as military applications of AI thus has a direct impact on the conditions of possibility for their regulation, by shaping what comes to be counted as ‘appropriate’ action. Denmark is therefore likely to be indirectly influenced by the technological standards set in such contexts.

To conclude, Denmark is coming into ever closer contact with weaponised AI, as well as with military applications of AI more broadly. This close contact is bound to shape, in both direct and indirect ways, what Denmark considers ‘appropriate’ conduct in using AI in the defence context. In this light, it is remarkable that Denmark does not use the platforms it has access to, such as the GGE on LAWS, to shape the discourse on AI regulation. It is high time for Denmark to reflect, publicly and openly, on what it regards as appropriate ways of developing and using military applications of AI and, importantly, on where the limits to these technologies should be set.